Social Networks and VoIP control and surveillance

- pierrebernard3

- Mar 15, 2021


1. Introduction:

Computer and network surveillance makes it possible to monitor computer activity and the data stored on hard drives, as well as data being transferred over computer networks such as the Internet. This monitoring is often carried out covertly, by governments, corporations, criminal organizations, or individuals. Depending on who carries it out and where, it may or may not be legal and may or may not require authorization from a court or other independent government agency.

Almost all Internet traffic can be monitored via internet surveillance programs. Surveillance allows governments and other agencies to maintain social control, recognize and monitor threats, and prevent and investigate criminal activity.

With the advent of programs such as the Total Information Awareness program, technologies such as high-speed surveillance computers and biometrics software, and laws such as the Communications Assistance for Law Enforcement Act, governments now possess an unprecedented ability to monitor the activities of citizens.

However, civil rights and privacy groups express concern that increasing surveillance of citizens will result in a mass surveillance society, with limited political and/or personal freedoms.

2. Initial Phase:

This white paper focuses on legal processes and leaves aside the hacking of networks to gain illegal control over networks and VoIP.

The government needs a legal framework obliging all fixed and mobile, public or private operators to ensure that all phone calls and broadband internet traffic (emails, web traffic, instant messaging, etc.) are available for unimpeded, real-time monitoring by authorized government police and security departments.

Nearly all governments have obliged their telecom operators to open their networks to their police, secret services, or military investigation departments. The operators install the relevant monitoring systems, usually via their equipment providers (NOKIA/SIEMENS/ERICSSON/HUAWEI/ZTE…).

The operators need to install a “packet sniffing/capture” system that intercepts packets so that the information relevant to the control purpose can be filtered.

Given the huge volume of information, the real-time constraints, the broadband data rates, and the diversity of words, sentences, originators, and destination groups specified in the filtering system, Big Data use cases and deployments are the usual solution.

The Big Data use cases are specified by the government body in charge of monitoring specific types of communication, then implemented by specialized companies that engineer and develop the algorithms used in the processing routines, and that also coordinate the various bodies and tool providers involved: providers of monitoring and filtering tools (Google Analytics/AWS/…) or of data lake/cloud tools (IBM, Teradata, …).

3. Business Intelligence and Companies’ Internal Control.

Business Intelligence is best known for monitoring internet users’ searches on search engines, their online purchasing habits, and so on, in order to anticipate their needs or to serve them targeted advertising.

The same methods apply to corporate surveillance, which is already quite common among employers who want to discourage their employees from unproductive activities (such as online shopping or streaming movies) or from browsing unethical content.

It makes it possible to verify that employees comply with company network policies and to prevent confidential information from being shared with unauthorized personnel.

This type of surveillance is mandatory within the government bodies in charge of monitoring the country’s networks, to prevent unethical use of the collected information, unauthorized publication of information, and so on.

Internal control and employee surveillance should be accompanied by censorship, which is different from surveillance. Surveillance can exist on its own without necessarily leading to censorship, but censorship needs surveillance in order to be applied. Censorship can block messages containing private information from being sent to unauthorized recipients, block the streaming of certain videos or access to certain social network sites, and send warning messages reminding employees of the company’s ethics rules and of the risks of breaching internal company rules. Such censorship usually leads quickly to self-censorship among employees.

4. Counter-attacks on Controlled Networks and Censorship.

a. Anonymizers (or anonymous proxies).

It is also important to understand the main options available for fighting network control and censorship. One of the most effective is to use an anonymous proxy.

An anonymizer or an anonymous proxy is a tool that attempts to make activity on the Internet untraceable. It is a proxy server computer that acts as an intermediary and privacy shield between a client computer and the rest of the Internet. It accesses the Internet on the user's behalf, protecting personal information by hiding the client computer's identifying information.

The anonymizers have two main uses:

- Fighting government censorship by allowing free access to all internet content. Anonymizers cannot, however, protect users against persecution for accessing the anonymizer website itself. Furthermore, because information about anonymizer websites is itself banned in those countries, users are wary that they may be falling into a government-set trap.

- Avoiding business intelligence used to serve targeted information to certain regions or countries (as CNN does), or videos based on what you usually watch (as YouTube does), etc.

An anonymous remailer is a protocol-specific anonymizer server that receives messages with embedded instructions on where to send them next, and forwards them without revealing where they originally came from. There are Cypherpunk anonymous remailers, Mixmaster anonymous remailers, and nym servers, among others, which differ in how they work, in the policies they adopt, and in the types of attack on e-mail anonymity they can (or are intended to) resist.

Protocol independence can be achieved by creating a tunnel to an anonymizer (a protocol-independent anonymizer). The technology used varies: anonymizer services may rely on protocols such as SOCKS, PPTP, or OpenVPN. In this case, either the application must support the tunneling protocol, or a piece of software must be installed to force all connections through the tunnel. Web browsers and FTP and IRC clients, for example, often support SOCKS, whereas telnet does not.

b. Malicious software.

Another type of attack is to install a surveillance program on a computer or network device that can search memory for suspicious data, monitor computer use, collect passwords, and/or report activities back to its operator in real time through the Internet connection. A keylogger is an example of this type of program. Ordinary keyloggers store their data on the local hard drive, but some are programmed to transmit data automatically over the network to a remote computer or web server.

Hacktivist groups such as Anonymous have used this approach to counterattack censorship and to publish secret information taken from censoring organizations.

There are multiple ways of installing such software. The most common is remote installation, using a backdoor created by a computer virus or trojan. This tactic has the advantage of potentially subjecting many computers to surveillance: viruses often spread to thousands or millions of computers and leave "backdoors" that are accessible over a network connection, enabling an intruder to install software and execute commands remotely. Such viruses and trojans are sometimes even developed by government agencies, as with the FBI’s CIPAV and Magic Lantern. More often, viruses created by other people or spyware installed by non-governmental actors can be used to gain access through the security breaches they create.

Another method is "cracking" into the computer to gain access over a network. An attacker can then install surveillance software remotely. Servers and computers with permanent broadband connections are most vulnerable to this type of attack.

Other sources of security breaches are employees giving out information and attackers using brute-force tactics to guess passwords. Some security surveys report that in around 80% of cases, disclosed passwords and doors left open through negligence are the cause of network intrusions.

One can also place surveillance software on a computer physically, by gaining entry to the place where the computer is kept and installing it from a compact disc, floppy disk, or thumb drive. Like hardware devices, this method has the disadvantage of requiring physical access to the computer. One well-known worm that spreads itself this way is Stuxnet. Although this method looks difficult, it is actually simpler than it seems, because in some networks a number of servers are located away from the main Network Operation Centers, in regional offices with no specific access protection.

5. Big Data and Network users’ control

Understanding how Internet services are used and how they operate is critical to people’s lives. Network Traffic Monitoring and Analysis (NTMA) is central to that task. Applications range from providing a view on network traffic to detecting anomalies and unknown attacks, while feeding systems responsible for usage monitoring and accounting. They collect the historical data needed to support traffic engineering and troubleshooting, helping to plan the network’s evolution and identify the root cause of problems. It is fair to say that NTMA applications are a cornerstone for guaranteeing that the services supporting our daily lives are always available and operating as expected.

Traffic monitoring and analysis is a complicated task. The massive traffic volumes, the speed of transmission systems, the natural evolution of services and attacks, and the variety of data sources and methods to acquire measurements are just some of the challenges faced by NTMA applications. As the complexity of the network continues to increase, more observation points become available to researchers, potentially allowing heterogeneous data to be collected and evaluated.

This trend makes it hard to design scalable, distributed applications and calls for efficient mechanisms for the online analysis of large streams of measurements. Moreover, as storage prices fall, it becomes possible to build massive historical datasets for retrospective analysis.

These challenges are precisely the characteristics of what has more recently become known as big data, i.e., situations in which data volume, velocity, veracity, and variety are the key challenges to extracting value from the data.

Indeed, traffic monitoring and analysis was one of the first big data sources to emerge, and it poses big data challenges more than ever. It is thus no surprise that researchers are turning to big data technologies to support NTMA applications.

Distributed file systems (e.g., the Hadoop Distributed File System, HDFS), big data platforms (e.g., Hadoop and Spark), and distributed machine learning and graph processing engines (e.g., MLlib and Apache Giraph) are some examples of technologies that help applications handle datasets that would otherwise be intractable.

Ultimately, by cataloging how challenges on NTMA have been faced with big data approaches, it is possible to highlight open issues and promising research directions.

a. Big data in brief

Those who rely on attributive definitions describe big data by the salient features of both the data and the process of analyzing it. They advocate what is nowadays widely known as the big data “5 V’s”: volume, velocity, variety, veracity, and value.

As shown in the figure, knowledge discovery in Big Data is classically divided into three groups of operations: Input, Analysis, and Output.

Data input performs the data management process, from the collection of raw data to the delivery of data in formats suitable for subsequent mining. It includes pre-processing, i.e., the initial steps to prepare the data, such as integrating heterogeneous sources and cleaning out spurious data.

Data analysis methods receive the prepared data and extract information, i.e., models for classification, hidden patterns, relations, rules, etc. The methods range from statistical modeling and analysis to machine learning and data mining algorithms.

Data output completes the process by converting information into knowledge. It includes steps to measure the information quality, to display information in succinct formats, and to assist analysts with the interpretation of results.
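To make the Input, Analysis, and Output stages concrete, here is a minimal Python sketch on a handful of made-up traffic records. The two record layouts, the host addresses, and the mean-plus-one-standard-deviation rule used as the "model" are all assumptions chosen for illustration; a real deployment would run each stage on a distributed platform rather than in a single script.

```python
from statistics import mean, pstdev

# Hypothetical flow records from two heterogeneous sources (assumed formats).
SOURCE_A = [
    {"src": "10.0.0.1", "bytes": 1200},
    {"src": "10.0.0.2", "bytes": 800},
    {"src": "10.0.0.3", "bytes": -5},      # spurious: negative byte count
]
SOURCE_B = [
    ("10.0.0.1", "3400"),                 # different layout: tuple of strings
    ("10.0.0.4", "90000"),
    ("10.0.0.2", "not-a-number"),         # spurious: unparsable value
]

def data_input(source_a, source_b):
    """Input: integrate both sources into one schema and clean spurious records."""
    records = [(r["src"], r["bytes"]) for r in source_a]
    for src, raw in source_b:
        try:
            records.append((src, int(raw)))
        except ValueError:
            continue  # drop records that cannot be parsed
    return [(src, b) for src, b in records if b >= 0]

def data_analysis(records, k=1.0):
    """Analysis: total bytes per host, then flag hosts above mean + k * std."""
    totals = {}
    for src, b in records:
        totals[src] = totals.get(src, 0) + b
    values = list(totals.values())
    threshold = mean(values) + k * pstdev(values)
    return totals, {host for host, v in totals.items() if v > threshold}

def data_output(totals, outliers):
    """Output: turn the extracted information into a short, readable report."""
    lines = [f"{host}: {total} bytes{' (outlier)' if host in outliers else ''}"
             for host, total in sorted(totals.items())]
    return "\n".join(lines)

records = data_input(SOURCE_A, SOURCE_B)
totals, outliers = data_analysis(records)
print(data_output(totals, outliers))
```

With this sample data, the input stage drops the two spurious records and the analysis stage flags 10.0.0.4 as the only host above the threshold.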

b. Big Data programming models and platforms

The four types of big data processing models are:

(i) General purpose – platforms to process big data that make few assumptions about the characteristics of the data and the algorithms executed,

(ii) SQL-like – platforms focusing on scalable processing of structured and tabular data,

(iii) Graph processing – platforms focusing on the processing of large graphs,

(iv) Stream processing – platforms dealing with large-scale data that arrives continuously in a streaming fashion.
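The stream-processing model in (iv) can be illustrated with a tumbling-window count, one of the simplest streaming operators. The sketch below is plain Python rather than Storm or S4 code, and it assumes events arrive in timestamp order; the event labels and the 10-second window are hypothetical.

```python
from collections import Counter
from typing import Iterable, Iterator, Tuple

Event = Tuple[int, str]  # (timestamp in seconds, key), e.g. a hypothetical protocol label

def tumbling_counts(events: Iterable[Event], window: int) -> Iterator[Tuple[int, dict]]:
    """Count keys per fixed-size, non-overlapping window.

    Assumes events arrive in timestamp order; a window is emitted as soon as
    the first event of a later window is seen, so memory stays bounded.
    """
    current_start = None
    counts = Counter()
    for ts, key in events:
        start = ts - ts % window          # start of the window this event falls in
        if current_start is not None and start != current_start:
            yield current_start, dict(counts)
            counts.clear()
        current_start = start
        counts[key] += 1
    if current_start is not None:         # flush the last, possibly partial window
        yield current_start, dict(counts)

stream = [(0, "dns"), (3, "http"), (7, "dns"), (11, "http"), (12, "http"), (25, "dns")]
for window_start, window_counts in tumbling_counts(stream, window=10):
    print(window_start, window_counts)
```

With the sample stream, the generator yields counts for the windows starting at 0, 10, and 20 seconds; windows with no events are simply skipped. Production engines additionally handle out-of-order events and distribute the state across machines.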

The figure below depicts this taxonomy with examples of systems.

MapReduce, Dryad, Flink, and Spark belong to the first type. Hive, HAWQ, Apache Drill, and Tajo belong to the SQL-like type. Pregel and GraphLab follow the graph processing model and, finally, Storm and S4 are examples of stream processing platforms.

A comprehensive review of big data programming models and platforms is far beyond the scope of this paper.

The Hadoop ecosystem: Hadoop is the most widespread of the general-purpose big data platforms. Given its importance, the figure provides some details about its components, considering Hadoop v2.0 and Spark.

Hadoop v2.0 consists of the Hadoop kernel, MapReduce, and the Hadoop Distributed File System (HDFS). YARN is the default resource manager, giving several competing jobs access to cluster resources. Other resource managers (e.g., Mesos) and execution engines (e.g., Tez) can be used too, for example to provide resources to Spark.

Spark, which can run on Hadoop from v2.0 onward, was introduced to overcome limitations of the MapReduce paradigm. Spark is based on data representations that can be transformed in multiple steps while residing efficiently in memory. In contrast, the MapReduce paradigm relies on basic operations (i.e., map/reduce) applied to data batches that are read from and stored to disk. Spark has gained momentum in non-batch scenarios, e.g., iterative and real-time big data applications, as well as in batch applications that cannot be solved in a few stages of map/reduce operations.
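The map/reduce operations mentioned above can be shown with the classic word-count example. This is a single-process Python simulation of the programming model only, not Hadoop or Spark code: Hadoop would run the map and reduce functions on many nodes and persist the intermediate pairs to disk, which is precisely the overhead Spark reduces by keeping intermediate data in memory.

```python
from collections import defaultdict
from itertools import chain

def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input record."""
    return [(word.lower(), 1) for word in line.split()]

def shuffle_phase(pairs):
    """Shuffle: group all intermediate values by key (done by the framework in Hadoop)."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Reduce: combine all values that share a key into one result."""
    return key, sum(values)

lines = ["spark keeps data in memory", "mapreduce writes data to disk"]
intermediate = chain.from_iterable(map_phase(line) for line in lines)
result = dict(reduce_phase(k, v) for k, v in shuffle_phase(intermediate).items())
print(result)
```

Running it counts `data` twice and every other word once.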

Several high-level languages and systems, such as Google’s Sawzall, Yahoo’s Pig Latin, Facebook’s Hive, and Microsoft’s SCOPE, have been proposed to simplify programming on top of such large-scale data processing platforms; Pig Latin and Hive, for example, run on top of Hadoop. Moreover, the community has introduced several libraries, such as Mahout over MapReduce and MLlib over Spark, to solve problems or fill gaps in the original Hadoop ecosystem.

Finally, the ecosystem has been complemented with tools targeting specific processing models, such as GraphX and Spark Streaming, which support graph and stream processing, respectively.

Besides the Apache Hadoop distributions, proprietary platforms offer different features for data processing and cluster management. Such solutions include Cloudera CDH, Hortonworks HDP, and the MapR Converged Data Platform.

To summarize, the diversity of use cases leads to a large variety of application platforms, and the open-source languages are constantly evolving. A Big Data use case is first and foremost a project organization involving a variety of specialists: the project must be defined correctly and sliced according to the four groups of big data before deciding on the type of platforms and languages. An architect then defines the data processes, programmers develop the big data routines, data scientists work on the algorithms, testers develop the protocols to check the robustness of the programs, and data analysts check that the output matches the expected type of results.

6. Social network analysis

In this domain, it is probably important to refer to US agencies, as most of the social networks with a worldwide reach, such as Facebook, Instagram, and Twitter, are run by US companies (TikTok, owned by China’s ByteDance, being a notable exception).

One common form of surveillance is to create maps of social networks based on data from social networking sites, as well as on traffic analysis information from phone call records, such as those in the N.S.A. call database, and internet traffic data gathered under CALEA. In the increasingly popular language of network theory, individuals are "nodes," and relationships and interactions form the "links" binding them together; by mapping those connections, network scientists try to expose patterns that might not otherwise be apparent. Researchers are applying newly devised algorithms to data lakes. These social network "maps" are then data mined to extract useful information such as personal interests, friendships and affiliations, wants, beliefs, thoughts, and activities. Given that in 2006 the N.S.A. was intercepting some 650 million communications worldwide every day, it is not surprising that its analysts focus on a question well suited to network theory: whom should we listen to in the first place? Metadata analysis and link analysis tools are nowadays the primary means of selecting the individuals on whom surveillance will focus.
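The "nodes" and "links" vocabulary maps directly onto a graph data structure. The sketch below builds an undirected graph from a few hypothetical call records and ranks people by degree centrality, one simple way of asking "whom should we listen to first?". The records, the placeholder names, and the choice of degree centrality (rather than, say, betweenness) are illustrative assumptions.

```python
from collections import defaultdict

# Hypothetical call-detail records: (caller, callee). Names are placeholders.
calls = [("A", "B"), ("A", "C"), ("A", "D"), ("B", "C"), ("D", "E"), ("E", "F"), ("A", "B")]

def build_graph(records):
    """Individuals become nodes; any call between two people becomes an undirected link."""
    graph = defaultdict(set)
    for caller, callee in records:
        if caller != callee:
            graph[caller].add(callee)   # sets collapse repeated calls into one link
            graph[callee].add(caller)
    return graph

def degree_centrality(graph):
    """Share of the other nodes each node is directly linked to (assumes >= 2 nodes)."""
    n = len(graph)
    return {node: len(neighbours) / (n - 1) for node, neighbours in graph.items()}

graph = build_graph(calls)
ranking = sorted(degree_centrality(graph).items(), key=lambda kv: -kv[1])
print(ranking[0])
```

Here person A, linked to three of the five other people, ranks first with a centrality of 0.6; the repeated A-B call does not add a second link.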

Many U.S. government agencies have been investing heavily in research involving social network analysis. The intelligence community believes that the biggest threat to the U.S. comes from decentralized, leaderless, geographically dispersed groups. These types of threats are most easily countered by finding important nodes in the network and removing them. To do this requires a detailed map of the network.
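The idea of finding important nodes and removing them can be measured by counting how many disconnected pieces a network falls into afterwards. This Python sketch uses a small hypothetical network, takes the highest-degree node as the "important" one (an assumption; other centrality measures are common), and counts connected components with breadth-first search.

```python
from collections import deque

# Hypothetical undirected network as an adjacency list.
network = {
    "A": {"B", "C", "D"}, "B": {"A", "C"}, "C": {"A", "B"},
    "D": {"A", "E"}, "E": {"D", "F"}, "F": {"E"},
}

def components(graph):
    """Count connected components with a breadth-first search."""
    seen, count = set(), 0
    for start in graph:
        if start in seen:
            continue
        count += 1
        queue = deque([start])
        seen.add(start)
        while queue:
            node = queue.popleft()
            for neighbour in graph[node]:
                if neighbour not in seen:
                    seen.add(neighbour)
                    queue.append(neighbour)
    return count

def remove_node(graph, target):
    """Return a copy of the graph without `target` and without its links."""
    return {n: neighbours - {target} for n, neighbours in graph.items() if n != target}

hub = max(network, key=lambda n: len(network[n]))   # highest-degree node
print(hub, components(network), components(remove_node(network, hub)))
```

Removing hub A splits the single connected network into two components, {B, C} and {D, E, F}.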

Some algorithmic programs can extend techniques of social network analysis to help distinguish potential terrorist cells from legitimate groups of people... To be successful, such an algorithmic routine will require information on the social interactions of most of the people around the globe. Since the Department of Defense cannot easily distinguish between peaceful citizens and terrorists, it will be necessary for it to gather data on innocent civilians as well as on potential terrorists.

Governments are increasingly purchasing sophisticated technology to monitor their citizens’ behavior on social media. Once the preserve of the world’s foremost intelligence agencies, this form of mass surveillance has made its way to a range of countries, from major authoritarian powers to smaller or poorer states that nevertheless hope to track dissidents and persecuted minorities. The booming commercial market for social media surveillance has lowered the cost of entry not only for the security services of dictatorships, but also for national and local law enforcement agencies in democracies, where it is being used with little oversight or accountability. Coupled with an alarming rise in the number of countries where social media users have been arrested for their legitimate online activities, the growing employment of social media surveillance threatens to squeeze the space for civic activism on digital platforms.

7. Social Media means of surveillance.

a. A shift to machine-driven monitoring of the public

Social media control refers to the collection and processing of personal data pulled from digital communication platforms, often through automated technology that allows for real-time aggregation, organization, and analysis of large amounts of metadata and content. Broader in scope than spyware, which intercepts communications by targeting specific individuals’ devices, social media surveillance cannot be dismissed as less invasive. Billions of people around the world use these digital platforms to communicate with loved ones, connect with friends and associates, and express their political, social, and religious beliefs. Even when it concerns individuals who seldom interact with such services, the information that is collected, generated, and inferred about them holds tremendous value not only for advertisers, but increasingly for law enforcement and intelligence agencies as well.

Governments have long employed people to monitor speech on social media, including by creating fraudulent accounts to connect with real-life users and gain access to networks. Authorities in Iran have boasted of a 42,000-strong army of volunteers who monitor online speech. Any citizen can report for duty on the Cyber Police (FATA) website. Similarly, the ruling Communist Party in China has recruited thousands of individuals to sift through the internet and report problematic content and accounts to authorities.

Advances in artificial intelligence (AI) have opened new possibilities for automated mass surveillance. Sophisticated monitoring systems can quickly map users’ relationships through link analysis; assign a meaning or attitude to their social media posts using natural-language processing and sentiment analysis; and infer their past, present, or future locations. Machine learning enables these systems to find patterns that may be invisible to humans, while deep neural networks can identify and suggest whole new categories of patterns for further investigation. Whether accurate or inaccurate, the conclusions made about an individual can have serious repercussions, particularly in countries where one’s political views, social interactions, sexual orientation, or religious faith can lead to closer scrutiny and outright punishment.

b. The global market for surveillance

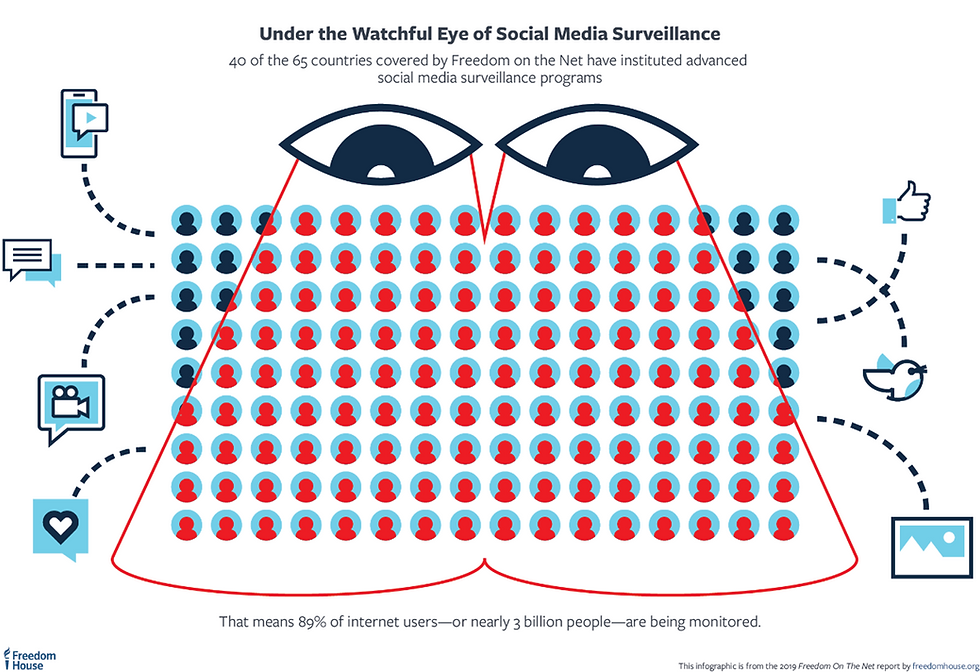

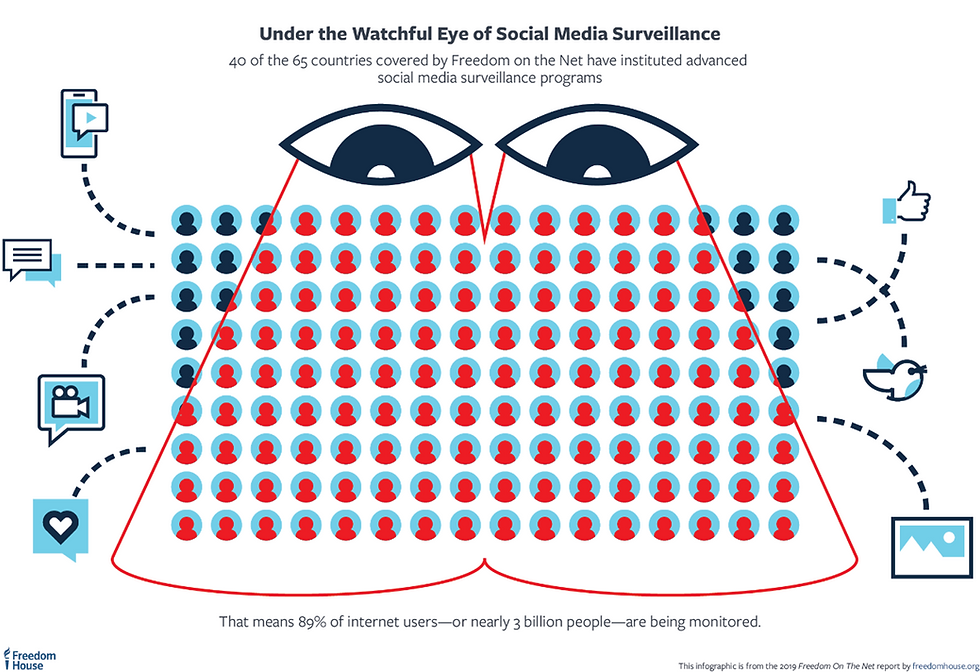

The market for social media surveillance has grown, giving intelligence and law enforcement agencies new tools for combing through massive amounts of information. According to Freedom House’s Freedom on the Net 2019 report, at least 40 of the 65 countries it covered had instituted advanced social media monitoring programs. Moreover, their use by governments is accelerating: in 15 of these countries, such programs had been expanded or newly established only in the year preceding that report. Justifying their efforts in the name of enhancing security, limiting disinformation, and ensuring public order, governments have effectively co-opted social media platforms. While these platforms typically present themselves as social connectors and community builders, state agencies in repressive countries see them as vast storehouses of speech and personal information that can be observed, collected, and analyzed to detect and suppress dissent.

China is a leader in developing, employing, and exporting social media surveillance tools. The Chinese firm Semptian has touted its Aegis surveillance system as providing “a full view to the virtual world” with the capacity to “store and analyze unlimited data.” The company claims to be monitoring over 200 million individuals in China, a quarter of the country’s internet users. The company even markets a “national firewall” product, mimicking the so-called Great Firewall that controls internet traffic in China. A complex web of regulations gives the Chinese state access to user content and metadata, allowing authorities to more easily identify and reprimand users who share sensitive content. Some countries in Asia, such as Bangladesh and the Philippines, are developing their social media surveillance capabilities in close cooperation with US authorities.

The Middle East and North Africa region is also a booming market for social media surveillance. Companies scheduled to attend a Dubai trade show in 2020 come from countries including China, India, Israel, Italy, the United States, and the United Kingdom. A Chinese company whose clients reportedly include the Chinese military and government bodies will hold live demonstrations on how to “monitor your targets’ messages, profiles, locations, behaviors, relationships, and more,” and how to “monitor public opinion for election.” Semptian, which has clients in the region, charges between $1.5 million and $2.5 million for monitoring the online activities of a population of five million people, an affordable price for most of the governments concerned.
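Dividing the quoted price range by the population covered shows why it is described as affordable:

$$
\frac{\$1.5\ \text{million}}{5\ \text{million people}} = \$0.30\ \text{per person},
\qquad
\frac{\$2.5\ \text{million}}{5\ \text{million people}} = \$0.50\ \text{per person}
$$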

Russia has used sophisticated social media surveillance tools for many years. The government issued three tenders in 2012 for the development of research methods related to “social networks intelligence,” “tacit control on the internet,” and “a special software package for the automated dissemination of information in large social networks,” foreshadowing how intelligence agencies would eventually master the manipulation of social media at home and abroad. In May 2019, authorities released a tender for technology to collect, analyze, and conduct sentiment analysis on social media content relating to President Vladimir Putin and other topics of interest to the government.

Monitoring projects are under way in Africa as well. The government of Nigeria allocated 2.2 billion naira ($6.6 million) in its 2018 budget for a “Social Media Mining Suite,” having already ordered the military to watch for antigovernment content online. Israeli firms Verint and WebIntPro have reportedly sold similar surveillance software to Angola and Kenya, respectively.

c. Abuse of these tools, even in strong democracies

The social media surveillance tools that have appeared in democracies got their start on foreign battlefields and in counterterrorism settings, designed to monitor acute security threats in places like Syria. While authorities in the past typically justified the use of these tools with the need to combat serious crimes such as terrorism, child sexual abuse, and large-scale narcotics trafficking, law enforcement and other agencies at the local, state, and federal levels are increasingly repurposing them for more questionable practices, such as screening travelers for their political views, tracking students’ behavior, or monitoring activists and protesters. This expansion makes oversight of surveillance policies more difficult and raises the risk that constitutionally protected activities will be impaired.

Such screening searches have become part of the US government’s drive toward big data surveillance. The resulting information is frequently deposited in massive multiagency databases where it can be combined with public records, secret intelligence materials, and datasets (including social media data) assembled by private companies. In one case, U.S. Immigration and Customs Enforcement (ICE) paid the data analytics company Palantir $42.3 million for a one-year contract related to FALCON, a custom-built database management tool. Its “Search and Analysis System” enables agents to analyze trends and establish links between individuals based on information gathered during border searches, purchased from private data brokers, and obtained from other intelligence collection exercises. Similar tools developed by Palantir are used by some 300 police departments in the state of California alone, as well as by police forces in Chicago, Los Angeles, New Orleans, and New York City. Many of these programs are facilitated through the Department of Homeland Security (DHS) and its Regional Intelligence Centers.

d. Risks for populations and for government popularity.

8. What does T.I.P.S.A.C. Co Ltd offer?

· Consultancy services for setting up appropriate regulations for ICT operators and appropriate obligations for major social networks,

· Assistance in writing the specifications for a bid process for VoIP network and social network surveillance,

· Audit of the existing intranet security of the department or agency in charge of operations, fixed or mobile, to prevent potential hacking or information leaks,

· Assistance in the technical grading and selection of the best bidder to implement the surveillance projects, taking into account financial as well as political aspects, given the sensitivity of the matter.

T.I.P.S.A.C. Co Ltd is a group of experts, independent of any supplier, neutral in its decisions, with strong ethical rules respecting all cultures, types of government, religions, and local laws.

Our experts and partners adhere to the non-disclosure charters and due diligence processes that this type of project imposes.

Do not hesitate to contact us for your surveillance and security project!
